Autonomous AI Outpaces Medical Device Rules, Study Reveals

The Rise of Autonomous AI in Healthcare and the Need for Regulatory Evolution

Artificial intelligence (AI) in healthcare is moving beyond narrowly scoped tools toward autonomous AI agents: systems designed to manage complex clinical workflows independently. These agents promise significant benefits, but they also pose new challenges for regulation and oversight.

Researchers at the Else Kröner Fresenius Center (EKFZ) for Digital Health at TUD Dresden University of Technology have identified a growing gap between the capabilities of these autonomous AI agents and the current regulatory frameworks in the US and Europe. Their findings, published in Nature Medicine, highlight the urgent need for innovative regulatory approaches that ensure both safety and the continued development of AI technologies in healthcare.

The Expansion of AI in Clinical Settings

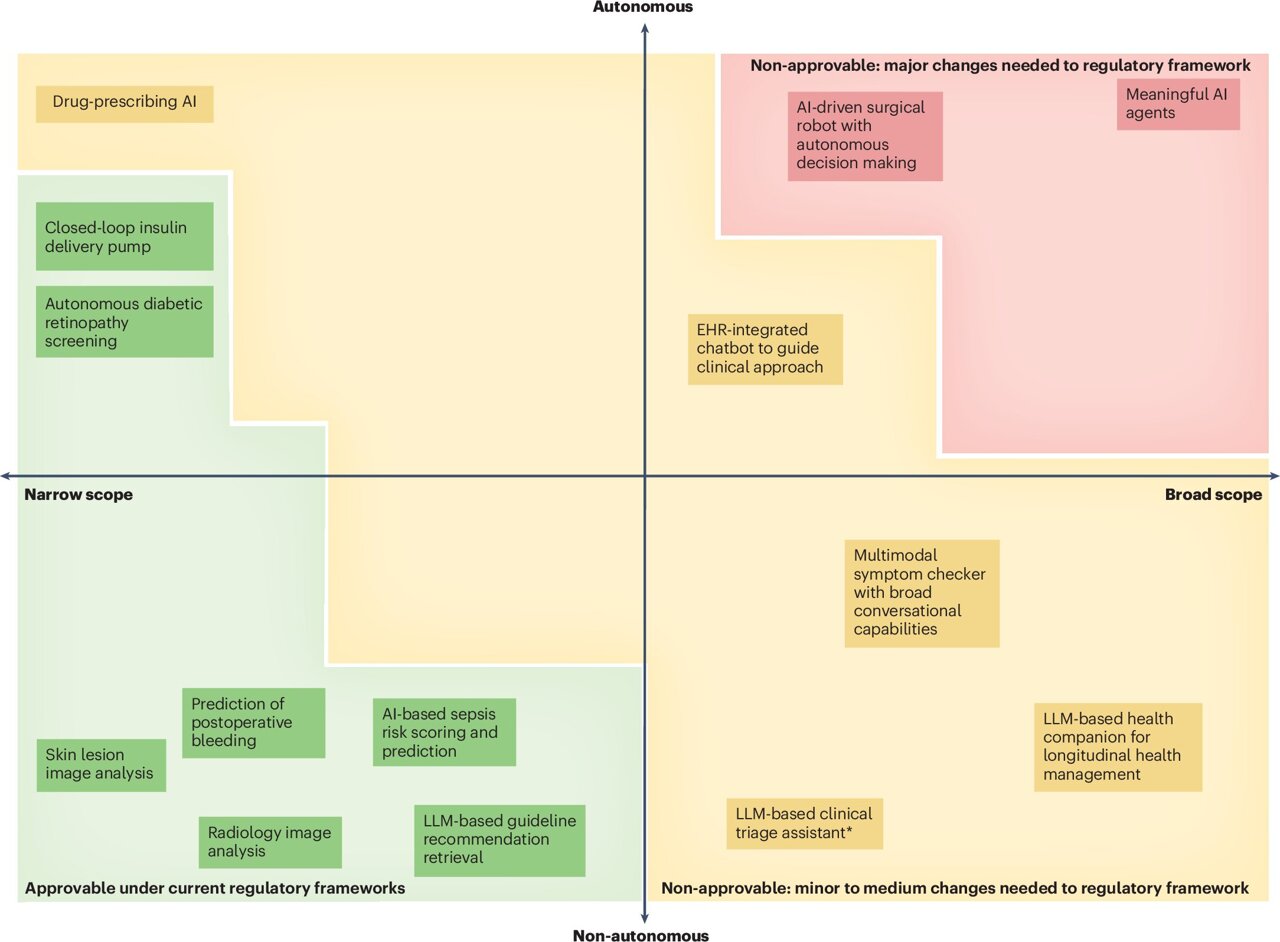

The use of large language models (LLMs) and generative AI (GenAI) in healthcare has increased sharply in recent years. Many of these technologies are already applied in clinical settings, where they often qualify as medical devices and must therefore be approved under the applicable laws and regulatory standards. Recent approvals show that applications with narrowly defined tasks can be integrated into healthcare systems successfully.

The next generation of AI systems, however, represents a significant shift. These autonomous AI agents can manage complex, goal-directed workflows without direct human intervention. They consist of multiple interconnected components, including external databases, image analysis tools, note-taking systems, clinical guidance modules, and patient data management platforms. An LLM controls the system: it decides which step to take next, corrects errors, and recognizes when a task is complete.
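The architecture described above can be pictured as a control loop. The following is a minimal sketch only, not the design of any system discussed in the study: the `controller` function is a hard-coded stand-in for the LLM, and the three tools, their names, and the simulated timeout are all hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, object]

# Hypothetical stand-ins for the connected components; a real agent would
# call external databases, imaging tools, and record systems here.
def analyze_image(state: State) -> State:
    if state.get("image_attempts", 0) == 0:
        state["image_attempts"] = 1
        raise RuntimeError("image tool timed out")  # simulated first-call failure
    state["image_finding"] = "no abnormality detected"
    return state

def lookup_guideline(state: State) -> State:
    state["guideline"] = "guideline text for " + str(state["question"])
    return state

def write_note(state: State) -> State:
    state["note"] = f"{state['image_finding']}; {state['guideline']}"
    return state

TOOLS: Dict[str, Callable[[State], State]] = {
    "analyze_image": analyze_image,
    "lookup_guideline": lookup_guideline,
    "write_note": write_note,
}

def controller(state: State) -> Optional[str]:
    """Stub for the LLM: choose the next tool, or None when the task is complete."""
    if "image_finding" not in state:
        return "analyze_image"
    if "guideline" not in state:
        return "lookup_guideline"
    if "note" not in state:
        return "write_note"
    return None  # task completion recognized

def run_agent(question: str, max_steps: int = 10) -> Tuple[State, List[str]]:
    state: State = {"question": question}
    log: List[str] = []
    for _ in range(max_steps):
        tool = controller(state)          # decision-making
        if tool is None:
            log.append("done")
            return state, log
        try:
            state = TOOLS[tool](state)
            log.append(f"ok:{tool}")
        except RuntimeError:
            log.append(f"retry:{tool}")   # error correction: try again next step
    raise RuntimeError("step budget exhausted before the task completed")

final_state, steps = run_agent("suspected fracture")
```

The step budget (`max_steps`) is one simple way to keep such a loop bounded. The log of tool calls hints at why oversight is harder here than for a single-task device: the sequence of actions is chosen at run time rather than fixed in advance.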

Challenges to Existing Regulatory Frameworks

Current medical device regulations were developed for static, narrowly focused technologies that operate under human oversight and do not change after entering the market. Autonomous AI agents, by contrast, are more autonomous, more adaptable, and broader in scope. Their ability to execute complex workflows independently poses significant challenges for regulators and developers alike.

"Unlike earlier systems, AI agents are capable of managing complex clinical workflows autonomously," says Jakob N. Kather, Professor of Clinical Artificial Intelligence at the EKFZ for Digital Health at TUD and oncologist at the Dresden University Hospital. "This opens up great opportunities for medicine—but also raises entirely new questions around safety, accountability, and regulation that we need to address."

Rethinking Regulatory Approaches

To facilitate the safe and effective implementation of autonomous AI agents in healthcare, regulatory frameworks must evolve beyond static paradigms. Oscar Freyer, lead author of the publication and research associate in the team of Professor Stephen Gilbert, emphasizes the need for adaptive regulatory oversight and flexible alternative approval pathways.

Immediate adaptations include extending enforcement discretion policies, where regulators acknowledge a product qualifies as a medical device but choose not to enforce certain requirements. Alternatively, systems that serve a medical purpose but fall outside traditional medical device regulation could be classified differently.

Medium- and Long-Term Solutions

Medium-term solutions involve developing voluntary alternative pathways (VAPs) and adaptive regulatory frameworks that supplement existing approval processes. These frameworks would shift from static pre-market approval to dynamic oversight using real-world performance data, stakeholder collaboration, and iterative updates. In cases of misconduct, devices may be transferred to established pathways.

Long-term solutions include regulating AI agents in a way that resembles how medical professionals are qualified. In this model, regulation would take the form of structured training processes, and systems would gain autonomy only after demonstrating safe and effective performance.
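One way to picture such a qualification-style model is as a set of autonomy tiers with periodic reviews. The sketch below is purely illustrative and is not taken from the study: the tier names, the 50-case review window, and the 98% safety threshold are all assumptions chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical autonomy tiers, loosely analogous to stages of clinical training.
LEVELS = ["supervised", "co-signed", "autonomous"]

@dataclass
class AgentRecord:
    level: int = 0        # index into LEVELS
    cases: int = 0        # cases handled since the last review
    safe_cases: int = 0   # cases judged safe and effective by reviewers

    def record_case(self, safe: bool) -> None:
        self.cases += 1
        self.safe_cases += int(safe)

    def review(self, min_cases: int = 50, min_safe_rate: float = 0.98) -> str:
        """Promote or demote one tier once enough cases have been observed."""
        if self.cases < min_cases:
            return LEVELS[self.level]  # not enough evidence yet, no change
        rate = self.safe_cases / self.cases
        if rate >= min_safe_rate:
            self.level = min(self.level + 1, len(LEVELS) - 1)
        else:
            self.level = max(self.level - 1, 0)
        self.cases = 0
        self.safe_cases = 0            # start a fresh observation window
        return LEVELS[self.level]

record = AgentRecord()
for _ in range(50):
    record.record_case(safe=True)
tier_after_first_review = record.review()
```

In this toy version, the same review that grants autonomy can also withdraw it, which mirrors the idea that oversight continues after initial qualification rather than ending at approval.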

While regulatory sandboxes offer some flexibility for testing new technologies, they are not scalable for widespread deployment due to the resources and commitment required by regulatory authorities.

The Path Forward

The researchers argue that meaningful implementation of autonomous AI agents in healthcare will likely remain impossible in the medium term without substantial regulatory reform. They identify VAPs and adaptive pathways as the most effective and realistic strategies for making such implementation possible.

Collaboration between regulators, healthcare providers, and technology developers is essential to create frameworks that account for the unique characteristics of AI agents while ensuring patient safety. As Stephen Gilbert, Professor of Medical Device Regulatory Science at the EKFZ for Digital Health at TUD Dresden University of Technology, states: "Realizing the full potential of AI agents in healthcare will require bold and forward-thinking reforms. Regulators must start preparing now to ensure patient safety and provide clear requirements to enable safe innovation."
